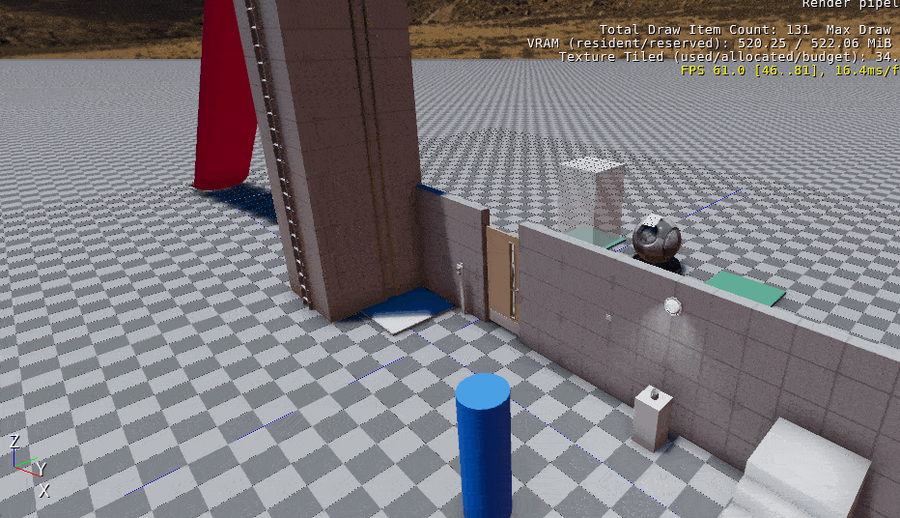

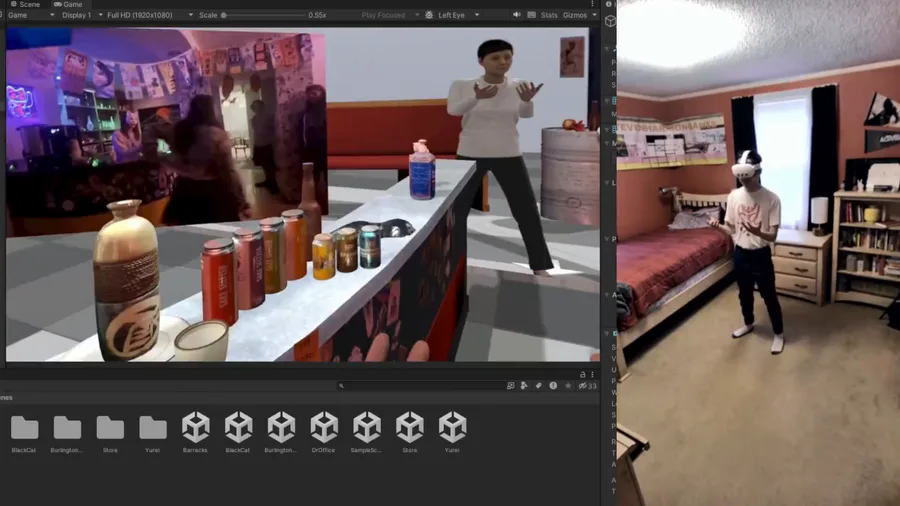

I used a mocap volume with OptiTrack cameras, cleaned the data in Motive, then went back and forth between Unity and MotionBuilder to retarget to the reference footage and scale the set to the gaff tape setup I had on the shoot day. I referenced Unity's boids example to make the bird-like intro title animation, and used iTween for the procedural animation of the throwables fluttering away. The VR side was incredibly simple with Oculus's OVR starter assets, but I never figured out how to debug on the Quest during development. I don't own a Quest or Rift S (I borrowed one for a week to make this), so I didn't feel like buying an expensive cable for Link. That meant I couldn't test in the editor and had to export, duplicate, and play remotely each time, which was a bit of a pain. Any suggestions on that?

If you're curious why there's no facial capture in the end product: I had a rig of phonemes and expressions set up like so, but Faceware had complications with the sheer amount of data (30 mins). My last-ditch effort to create a spectrogram from the voice-over audio and determine phonemes visually was... a failure. I did find an asset on the Asset Store called LipSync Pro that does just that, but I didn't have enough time to fully implement it before the due date, so the facial animation was scrapped.

Overall, though, I'm happy with the final product. It gets the feeling of the experience across, and that's all I wanted from this project.
