Hey guys!

Yesterday I (accidentally) updated the Shader Graph package from version 2.0.6-preview to 3.0.0-preview, only to realize that all my Shader Graph shaders were broken!

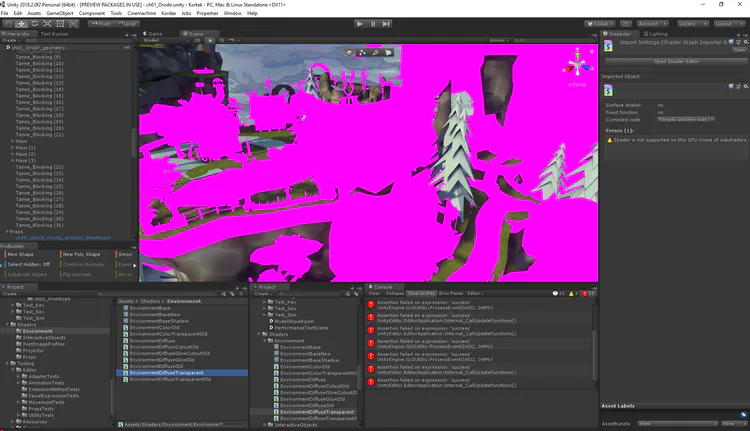

The error message: “Shader is not supported on this GPU”

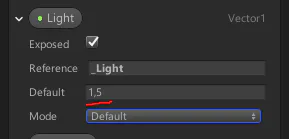

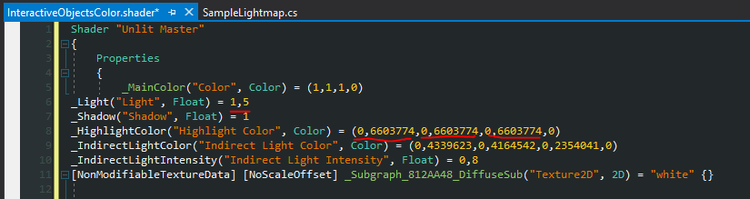

After hours of searching for the cause, I noticed that Unity automatically converts my floating-point inputs written with a decimal point (e.g. “1.23753”) into values written with a decimal comma (“1,23753”).

Of course, when the graph is translated into shader code, the result is garbage. A four-component vector such as (1.2, 1.2, 1.2, 1) is written out as (1,2, 1,2, 1,2, 1), which reads as seven comma-separated components, so it obviously can’t work.
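To make the failure concrete, here is a small Python sketch that mimics the conversion. It assumes the bug boils down to writing each component with a comma as the decimal separator (simulated with a plain string replace, not Unity's actual code) and then joining the components with `, `. The helper names are made up for illustration. Splitting the result back on commas gives seven values instead of four.

```python
def vector_literal(components, decimal_sep="."):
    """Write a vector as "(a, b, c, d)", using decimal_sep as the decimal separator.

    Hypothetical helper: the separator swap simulates the locale conversion.
    """
    parts = [format(c, "g").replace(".", decimal_sep) for c in components]
    return "(" + ", ".join(parts) + ")"


def count_arguments(literal):
    """Count comma-separated values, the way a parser would split the literal."""
    inner = literal[literal.index("(") + 1 : literal.rindex(")")]
    return len(inner.split(","))


color = (1.2, 1.2, 1.2, 1.0)
ok = vector_literal(color)                       # decimal point
broken = vector_literal(color, decimal_sep=",")  # decimal comma

print(ok, count_arguments(ok))          # (1.2, 1.2, 1.2, 1) 4
print(broken, count_arguments(broken))  # (1,2, 1,2, 1,2, 1) 7
```

The comma is doing double duty as decimal separator and list separator, which is why the component count silently changes.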

The solution

The workaround is quite easy: avoid fractional values in your property list (values baked directly into the shader are translated correctly). Set your properties to 1 instead of 0.8, and set your colors to FFFFFF or 000000 instead of A8A8A8, etc.
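Why does FFFFFF survive while A8A8A8 doesn't? Assuming the usual mapping of each hex byte to a 0 to 1 float channel (byte / 255), only 00 and FF land on whole numbers; A8 becomes 168/255 ≈ 0.659, which needs a decimal separator. A minimal Python sketch (function names are hypothetical):

```python
def hex_to_channels(hex_color):
    """Convert an RRGGBB hex string to 0..1 float channels (byte / 255)."""
    hex_color = hex_color.lstrip("#")
    return tuple(int(hex_color[i : i + 2], 16) / 255 for i in (0, 2, 4))


def needs_decimal_separator(hex_color):
    """True if any channel is fractional, i.e. would be written with a decimal separator."""
    return any(not channel.is_integer() for channel in hex_to_channels(hex_color))


for color in ("FFFFFF", "000000", "A8A8A8"):
    print(color, hex_to_channels(color), needs_decimal_separator(color))
```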

The drawback of this workaround is that you can’t set appropriate default values for your shaders. You have to choose between the reliability of hand-coded shaders and the convenience of Shader Graph.

Or, even better: write a tool that automatically sets property values for all materials using a specific shader. That way you can keep integer defaults in the Shader Graph and then reassign the proper floating-point values to all those materials.
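Inside Unity such a tool would be a C# editor script (for example one that finds material assets and calls `Material.SetFloat` / `Material.SetColor` on them). The logic itself is engine-independent, so here is a minimal Python sketch of it. The `Material` class, the shader names `MyGraphShader` and `OtherShader`, and the property names `_Smoothness` and `_Tint` are all hypothetical stand-ins, not Unity's API.

```python
from dataclasses import dataclass, field


@dataclass
class Material:
    """Hypothetical stand-in for a material asset: a shader name plus property values."""
    name: str
    shader: str
    properties: dict = field(default_factory=dict)


def reassign_properties(materials, shader_name, overrides):
    """Apply the intended fractional values to every material using shader_name.

    Returns the names of the materials that were changed.
    """
    changed = []
    for material in materials:
        if material.shader != shader_name:
            continue  # leave materials that use other shaders untouched
        material.properties.update(overrides)
        changed.append(material.name)
    return changed


# Integer defaults come from the graph; the real values are restored afterwards.
materials = [
    Material("Rock", "MyGraphShader", {"_Smoothness": 1, "_Tint": (1, 1, 1, 1)}),
    Material("Water", "MyGraphShader", {"_Smoothness": 1, "_Tint": (1, 1, 1, 1)}),
    Material("UI", "OtherShader", {"_Smoothness": 0}),
]
overrides = {"_Smoothness": 0.8, "_Tint": (0.659, 0.659, 0.659, 1.0)}
print(reassign_properties(materials, "MyGraphShader", overrides))  # ['Rock', 'Water']
```

Keeping the overrides in one place means the shader's integer defaults never need a decimal separator, while every material still ends up with its intended values.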

Thanks for reading!
